By Steve Endow

I had a call with a consultant who was having trouble unlocking a SQL Server login. While trying to log in to GP Utilities with the sa login, he used the wrong password and ended up locking out sa. Not a big deal, but when he went into SQL Server Management Studio to unlock the sa account, he received this message: "Reset password for the login while unlocking". I never use the SQL password policy option on my development SQL servers, so I never lock out accounts, but apparently...
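The teaser stops before the author's solution, so this is not necessarily the fix from the full post. One commonly cited workaround is to turn the login's CHECK_POLICY option off and back on. This clears the lockout without setting a new password. It is a minimal sketch, assuming you are connected as a different sysadmin (for example, a Windows login), since sa itself is locked. SQL Server will not allow CHECK_POLICY to be OFF while CHECK_EXPIRATION is ON. If expiration is enabled for the login, you will need to turn it off first and re-enable it afterward if you want it.

```sql
-- Run as another sysadmin login; sa cannot be used while it is locked out.
-- IsLocked: 1 = locked, 0 = not locked
SELECT LOGINPROPERTY(N'sa', 'IsLocked') AS IsLocked;

-- Toggling the password policy off and back on clears the lockout
-- without requiring a password reset.
-- (If CHECK_EXPIRATION is ON for this login, set it OFF before this step.)
ALTER LOGIN [sa] WITH CHECK_POLICY = OFF;
ALTER LOGIN [sa] WITH CHECK_POLICY = ON;

-- Confirm the login is no longer locked
SELECT LOGINPROPERTY(N'sa', 'IsLocked') AS IsLocked;
```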


Unlocking a SQL Server login without resetting the password


GP 2013 R2 changes to User Setup window

By Steve Endow

I installed GP 2013 R2 last week and am slowly encountering a few changes and new features. One change that jumped out at me was the redesigned User Setup window. (Screenshots: the User Setup window in GP 2013 SP2 and in GP 2013 R2.) Obviously the R2 window has the rather "prominent" ribbon at the top, but aside from that global change in R2, the User Setup window has a few interesting additions. User Type: Full vs. Limited. A Limited user is apparently "restricted to inquiries and reports" only. And if the...


Help!!! My Dynamics GP SQL Server is out of disk space!!!

By Steve Endow

ZOMG! Your SQL Server only has 8 MEGABYTES of disk space left!!! What do you do??? Obviously, there are many reasons for a full disk--maybe there are extra database backups, or a bunch of...
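The teaser ends before the author's advice, so this is only a generic starting point, not his checklist. A quick way to see which database files are taking up the space is to query the catalog views. The same views also show whether a transaction log is stuck waiting on something, such as a log backup under the full recovery model.

```sql
-- Size of every data and log file on the instance, largest first.
-- sys.master_files.size is in 8 KB pages, so * 8 / 1024 converts it to MB.
SELECT DB_NAME(mf.database_id)            AS DatabaseName,
       mf.name                            AS LogicalName,
       mf.type_desc                       AS FileType,
       mf.physical_name                   AS PhysicalName,
       CAST(mf.size AS BIGINT) * 8 / 1024 AS SizeMB
FROM sys.master_files AS mf
ORDER BY mf.size DESC;

-- Why each database's log cannot currently be truncated
-- (LOG_BACKUP means a full-recovery database is waiting for a log backup).
SELECT name, recovery_model_desc, log_reuse_wait_desc
FROM sys.databases
ORDER BY name;
```

A very large log file paired with LOG_BACKUP is a common culprit. Even so, check backups and other files on the drive before concluding anything.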


Project Types in Dynamics GP- Not Always What They Seem

My entry point to Dynamics GP, about 14 years ago, was Project Accounting. It was the first module I learned, and I lived the lessons of how hard it is to change course once you have something set up. In my career as a consultant, I have witnessed many a Project Accounting implementation gone awry. And in my naive younger days I would judge the prior consultants, thinking they must not have understood how Project works. But over time, I have come to understand that the issue is often a matter of definitions and communication.


When I sit down and ask a client to describe their projects, they will often use terms like "time and materials" or "fixed price" or "cost plus". And it can be tempting in those moments to immediately correlate that to the GP terms. I mean, they are identical, right? GP has Fixed Price, Cost Plus, and Time and Materials projects. But, hold up...

When clients/users describe their projects, they are often talking in terms of how they bill the project (and the corresponding contract terms). In GP, however, the difference between project types is more about revenue recognition and how billing amounts are calculated. So it is important to dive deeper into the project type discussion to avoid setting up unnecessarily complicated projects.

Let's break it down by the attributes of each project type, starting with most complicated to least (in my humble opinion)...

Cost Plus

- Fee plus allowable (billable) expenses

- Can include project fee (lump amount), retainer fee (amount paid in advance) or retention (amount withheld from billing)

- Billing calculated as percent complete (Forecast vs. Actual) based on profit type of budget items

- Revenue Recognition calculated per accounting method (Based on percent complete, or when project completed). Will not recognize when billed.

Fixed Price

- Fixed fee only

- Can include project fee (lump amount), retainer fee (amount paid in advance) or retention (amount withheld from billing)

- Billing calculated as percent complete (Forecast vs. Actual) based on profit type of budget items

- Revenue Recognition calculated per accounting method (Based on percent complete, or when project completed). Will not recognize when billed.

Time and Materials

- Can include both fees and allowable (billable) expenses

- Can include project fee (lump amount), retainer fee (amount paid in advance) or service fee (fee amount recognized over time)

- Billing is calculated based on profit type of budget items and scheduled fee dates (not percent complete)

- Revenue is recognized on expenses and project fees based on the accounting method, either when the expense is posted or when it is billed (not percent complete)

- Service fees are the only feature of Time and Materials projects to require revenue recognition, and they recognize based on duration (you enter a start and end date for the fee)

As you read through the list, I want you to note specifically the impact of revenue recognition and billing on the project type. If you do NOT recognize revenue on a percent-complete basis, but instead recognize it when you bill, then your projects may be Time and Materials by GP standards. If you bill specific amounts at specific times, rather than progress billing based on percent complete, then your projects may be Time and Materials by GP standards. And if you need to recognize revenue over time, rather than based on budget vs. actual, then a Service Fee on a Time and Materials project may be the right fit in GP.

The lesson here is not to take the terms at face value, and to make sure you understand the impact of the choice in GP (not just what you call it internally or what the contract terms say).

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a senior managing consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.


Oldie But Goody- User Errors When Logging in to Test Companies

This is a pretty common issue, but I thought it was worthwhile to post a summary of the issue and the (relatively) easy fix.


First, the scenario...

- You set up users in GP (Admin Page-Setup-User)

- You don't want users accidentally testing in the live company, so you limit their access to the Test company only (Admin Page-Setup-User Access)

- This works great until you refresh the Test database with a copy of the Live database

- Now the users can't access the Test company, and receive a variety of errors on the SY_Current_Activity table

So, it's a relatively easy three part fix...(as always test this out, have a backup, yadda yadda yadda)

- In SQL Server Management Studio, first expand the Test company database, then expand Security, then expand Users. Make sure the user in question is NOT listed. If they are, delete the user.

- Next, in SQL Server Management Studio, expand the server-level Security folder, then expand Logins. Right-click the affected user and choose Properties, then click the User Mapping page. Clear the checkbox for the Test database in the upper part of the User Mapping window.

- Log in to GP and navigate to Admin Page-Setup-User Access. Select the affected user, and re-mark access to the Test company.

To avoid the issue in the first place:

- Give users access to both the live and test versions of companies (not just the test versions), Admin Page-Setup-User Access.

- Control their ability to work in the Live company by not giving them a security role there, Admin Page-Setup-User Security.
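If you prefer a script to the SSMS clicks in step 1 of the fix, the sketch below checks whether the user exists in the Test company database and whether it belongs to the GP DYNGRP role. It then drops the leftover user. TEST and jdoe are placeholder names; substitute your own Test company database and GP user ID. Steps 2 and 3 (clearing the User Mapping and re-marking access in GP User Access) still apply afterward. DROP USER will fail if the user happens to own a schema.

```sql
-- Placeholder names: TEST = Test company database, jdoe = GP user ID
USE [TEST];

-- Does the user exist here, which login is it tied to, and is it in DYNGRP?
SELECT dp.name AS DatabaseUser,
       sp.name AS ServerLogin,
       CASE WHEN EXISTS (SELECT 1
                         FROM sys.database_role_members AS rm
                         JOIN sys.database_principals AS r
                           ON r.principal_id = rm.role_principal_id
                         WHERE r.name = N'DYNGRP'
                           AND rm.member_principal_id = dp.principal_id)
            THEN 1 ELSE 0 END AS InDYNGRP
FROM sys.database_principals AS dp
LEFT JOIN sys.server_principals AS sp ON sp.sid = dp.sid
WHERE dp.name = N'jdoe';

-- Script equivalent of step 1: remove the leftover database user
IF EXISTS (SELECT 1 FROM sys.database_principals WHERE name = N'jdoe')
    DROP USER [jdoe];
```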


Dynamics GP Web Services does not support importing sales taxes for SOP Sales Orders

By Steve Endow

I just spoke with a customer who is trying to use Dynamics GP Web Services to import SOP Sales Orders.

He needs to import header level taxes for the sales orders, but was unable to do so--he would get an error about the amount being incorrect. He had read a forum post indicating that Web Services did not support sales taxes, so he didn't know if he was doing something wrong with his import, or if it was a limitation of Web Services.

I recommended that he contact support, as that would be the quickest way to either determine that sales taxes were not supported, or find the issue with his import.

He contacted MS support and confirmed that Dynamics GP Web Services does not support the import of sales taxes for Sales Orders. Apparently this is true for GP 10, 2010 and 2013. He was told that Web Services does support the import of sales taxes for SOP Invoices, but not for SOP Sales Orders.

I'm assuming there is some reason for this, but it seems baffling. eConnect supports the import of sales taxes for orders, so I don't understand why Web Services wouldn't offer similar support. And why Invoices are supported but Orders are not is equally puzzling.

I guess that's one more reason why I won't be using Web Services. I'm just not a fan.

Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.


Importing Dynamics GP AR Apply records using SQL

By Steve Endow

I was working with a customer this morning who had recently imported and posted 2,800 AR cash receipts. Only after posting the cash receipts did they realize that the payment applications for those cash receipts did not import.

Since Integration Manager doesn't allow you to import just payment applications, we had to come up with a way to import the 2,800 AR payment applications.

A few years ago, I developed an eConnect integration that imported AR cash receipts and automatically applied them to open invoices. But since the client already had the payment application data, that was overkill--we just needed a way to import the applications without any additional fancy logic.

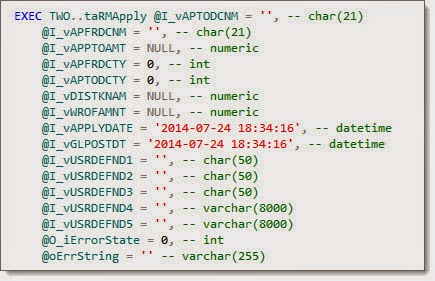

Rather than deal with a .NET application, I figured we could just use the eConnect RM Apply stored procedure--taRMApply. If we imported the AR payment application data into a SQL staging table, a SQL script could loop through the apply records and call the taRMApply procedure for each. Sounded easy, but I was afraid there would be a catch somewhere.

So I created an initial SQL script that would read the apply data from the staging table into a cursor, loop through that cursor, and call taRMApply. It turned out to be surprisingly simple and clean. And I was able to easily update each record of the staging table to indicate whether the apply was successful, and if not, record the error from eConnect.

Here is the SQL Script that I created, which I have also shared up on my OneDrive in case you want to download it instead.

The script assumes you have a staging table with a unique row ID, the necessary apply from and apply to values, and an imported flag field and an importstatus field. You can adjust the table names, fields, and field names to suit your needs.
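The post doesn't include the staging table definition, so here is a hypothetical one. Its column names match those the script reads and updates, and its data types mirror the variables the script declares. The sample row's document numbers are made up. The type values assume the standard RM document type codes (9 = payment, 1 = invoice), which you should verify against your own RM data.

```sql
-- Hypothetical staging table matching the columns used by the apply script
CREATE TABLE dbo.staging (
    RowID         INT IDENTITY(1, 1) PRIMARY KEY,     -- unique row ID
    ApplyFrom     VARCHAR(21)    NOT NULL,            -- payment (apply from) document number
    ApplyTo       VARCHAR(21)    NOT NULL,            -- invoice (apply to) document number
    ApplyAmount   NUMERIC(19, 5) NOT NULL,
    ApplyFromType INT            NOT NULL,            -- RM document type of the payment
    ApplyToType   INT            NOT NULL,            -- RM document type of the invoice
    imported      TINYINT        NOT NULL DEFAULT 0,  -- 0 = pending or failed, 1 = applied
    importstatus  VARCHAR(255)   NOT NULL DEFAULT ''  -- eConnect error string on failure
);

-- Made-up sample row: apply payment PMT000123 to invoice INV000456
INSERT INTO dbo.staging (ApplyFrom, ApplyTo, ApplyAmount, ApplyFromType, ApplyToType)
VALUES ('PMT000123', 'INV000456', 150.00, 9, 1);
```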

One last note--the ErrorString output from the taRMApply procedure is going to be an error number. You can look up that number in the DYNAMICS..taErrorCode table to get the related text error message.
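To review the failures after a run, a query along these lines joins the failed staging rows to the error table. It assumes taErrorCode has ErrorCode and ErrorDesc columns; run sp_help on DYNAMICS..taErrorCode to confirm. It also assumes importstatus holds a single error number. If a row's string contains several numbers, the join will not match and those will need to be looked up individually.

```sql
-- Failed applies with the eConnect error description (assumed column names)
SELECT s.RowID,
       s.ApplyFrom,
       s.ApplyTo,
       s.ApplyAmount,
       s.importstatus AS ErrorNumber,
       e.ErrorDesc
FROM dbo.staging AS s
LEFT JOIN DYNAMICS.dbo.taErrorCode AS e
    ON CAST(e.ErrorCode AS VARCHAR(20)) = LTRIM(RTRIM(s.importstatus))
WHERE s.imported = 0
  AND s.importstatus <> ''   -- skip rows that have not been attempted yet
ORDER BY s.RowID;
```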

You will want to test this yourself, and make sure to run your initial test against a Test database first, but it appears to have worked well. Based on an initial test, it took a minute or two to apply the 2,800 payments, so it appears to be fairly fast.

To my pleasant surprise, the process went smoothly, and other than a small error in the script that we quickly fixed, it worked well.

If you find any errors in the sample script below, or have any suggestions for improving it, please let me know.


/*
7/24/2014
Steve Endow, Precipio Services

Read RM payment application data from a staging table and apply payments to open invoices

sp_help RM20201
sp_help taRMApply
*/

DECLARE @RowID INT
DECLARE @ApplyFrom VARCHAR(21)
DECLARE @ApplyTo VARCHAR(21)
DECLARE @ApplyAmount NUMERIC(19, 5)
DECLARE @ApplyFromType INT
DECLARE @ApplyToType INT
DECLARE @ApplyDate DATETIME
DECLARE @ErrorState INT
DECLARE @ErrorString VARCHAR(255)

SELECT @ApplyDate = '2014-07-24'

--Get data from staging table
DECLARE ApplyCursor CURSOR FOR
    SELECT RowID, ApplyFrom, ApplyTo, ApplyAmount, ApplyFromType, ApplyToType
    FROM staging
    WHERE imported = 0

OPEN ApplyCursor

--Retrieve first record from cursor
FETCH NEXT FROM ApplyCursor INTO @RowID, @ApplyFrom, @ApplyTo, @ApplyAmount, @ApplyFromType, @ApplyToType

WHILE @@FETCH_STATUS = 0
BEGIN
    --Perform apply
    EXEC dbo.taRMApply
        @I_vAPTODCNM = @ApplyTo,            -- char(21)
        @I_vAPFRDCNM = @ApplyFrom,          -- char(21)
        @I_vAPPTOAMT = @ApplyAmount,        -- numeric
        @I_vAPFRDCTY = @ApplyFromType,      -- int
        @I_vAPTODCTY = @ApplyToType,        -- int
        @I_vDISTKNAM = 0,                   -- numeric
        @I_vWROFAMNT = 0,                   -- numeric
        @I_vAPPLYDATE = @ApplyDate,         -- datetime
        @I_vGLPOSTDT = @ApplyDate,          -- datetime
        @I_vUSRDEFND1 = '',                 -- char(50)
        @I_vUSRDEFND2 = '',                 -- char(50)
        @I_vUSRDEFND3 = '',                 -- char(50)
        @I_vUSRDEFND4 = '',                 -- varchar(8000)
        @I_vUSRDEFND5 = '',                 -- varchar(8000)
        @O_iErrorState = @ErrorState OUTPUT, -- int
        @oErrString = @ErrorString OUTPUT    -- varchar(255)

    --Check for success
    IF (@ErrorState = 0)
    BEGIN
        --If apply was successful
        UPDATE staging SET imported = 1, importstatus = '' WHERE RowID = @RowID
    END
    ELSE
    BEGIN
        --If apply failed
        UPDATE staging SET imported = 0, importstatus = @ErrorString WHERE RowID = @RowID
    END

    FETCH NEXT FROM ApplyCursor INTO @RowID, @ApplyFrom, @ApplyTo, @ApplyAmount, @ApplyFromType, @ApplyToType
END

CLOSE ApplyCursor
DEALLOCATE ApplyCursor
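Before running the apply script against a live company, it may help to pre-check the staging data. This hypothetical query flags two kinds of rows. The first is a row whose apply-to document is not found in the RM open transactions table (RM20101) for the given document type. The second is a row whose apply amount exceeds the document's current remaining amount. The query assumes the RM20101 columns DOCNUMBR, RMDTYPAL and CURTRXAM. It checks each row on its own, so several staging rows applied to the same invoice could still exceed its balance in total.

```sql
-- Pre-check sketch: staging rows that eConnect would likely reject
SELECT s.RowID,
       s.ApplyTo,
       s.ApplyToType,
       s.ApplyAmount,
       o.CURTRXAM AS RemainingAmount,
       CASE WHEN o.DOCNUMBR IS NULL
            THEN 'Apply-to document not found in RM20101'
            ELSE 'Apply amount exceeds remaining amount'
       END AS Problem
FROM dbo.staging AS s
LEFT JOIN dbo.RM20101 AS o
    ON o.DOCNUMBR = s.ApplyTo          -- trailing char padding is ignored by =
   AND o.RMDTYPAL = s.ApplyToType
WHERE s.imported = 0
  AND (o.DOCNUMBR IS NULL OR s.ApplyAmount > o.CURTRXAM)
ORDER BY s.RowID;
```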

Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.


Product Recommendation: SQL Prompt by Red Gate Software

By Steve Endow

If you have been using SQL Server Management Studio for several years, you probably know that its Intellisense feature sometimes doesn't work. I've had it work well on some machines, intermittently on other machines, and on some servers, it just doesn't work at all no matter what I try. Even when it does work, it is sometimes slow and not always helpful.

While talking to the famous Victoria Yudin the other day, she showed me a handy tool that she uses called SQL Prompt, from the well known company Red Gate Software.

Victoria showed me how it provided fast, reliable, and very full featured Intellisense within SQL Management Studio. Here's an example.

It is very responsive, so as you type each character, it immediately displays and filters its results. And in addition to showing you the proposed object names for the word you are currently typing, it also displays additional related information--so in the example above, in addition to listing the RM tables, it displays the fields in RM20201 with their data types.

It also supports "snippets", which are shortcuts that are automatically converted into larger SQL statements with a press of the Tab key. In this example, if I type "ssf" and press Tab, it converts it to "SELECT * FROM" and then displays a list of tables.

This morning, when I was working on the eConnect AR apply script, I just typed "EXEC taRMApply" and it automatically wrote the parameters for the stored procedure.

That "auto code" feature right there saved me several minutes of tedious typing or copying and pasting of the parameters, and the resulting formatting is clean and easy to work with.

After trying it for just a few days, I'm a believer. But there are two small downsides. First, it isn't free--the license is currently $369. While this seems like a very reasonable price for such a powerful and refined tool, I can understand if that is a little expensive for a typical GP consultant or VAR.

The second issue is one that I face with several specialized tools that I use: Once you get used to using it and relying on it, you will be frustrated if you have to work on a server that doesn't have it, such as on a client's SQL Server. I use the UltraEdit text editor, and it drives me nuts when I don't have it on a machine and have to clean up several thousand rows of data.

But aside from those two small caveats, it looks like a great utility.


Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.


Have you registered for reIMAGINE 2014 yet?

By Steve Endow

Is there any spot on the planet more inviting, more bucolic, more exciting, or more of a global destination than Fargo, North Dakota in November?

Nope.

On November 9-12, 2014, the world will be watching as Dynamic Partner Connections hosts the reIMAGINE 2014 conference at the posh Holiday Inn Fargo, the premier resort and spa for Fargo travelers.

There will be dozens of sessions, jam-packed with Dynamics GP goodness, Microsoft campus tours, and even a cocktail reception. Did I mention that the Holiday Inn has an indoor pirate ship water park? Seriously, no joke. Where else can you experience an indoor pirate ship? Imagine the stories you'll be able to tell when you get back from the conference.

My fun story is that at the GP Tech Airlift conference in Fargo two years ago, there were TWO different fake bomb threats. Yes, in Fargo. One was at Fargo's world-famous Hector International Airport on the day before the conference, which prevented anyone from entering the airport and stopped all departing flights. Fortunately, they were letting people leave the airport, but the catch was that there were no rental cars, since nobody else could come back to the airport to return their rental cars! Luckily, I was getting a ride from a clever colleague who bribed the woman at the rental car counter, who then snuck us the keys for the last car on the lot--a top-of-the-line minivan (aka the Fargo party bus). The second bomb threat was at North Dakota State University on the day after the conference. Fargo police blocked off a perimeter around the entire university for several hours, so we had to postpone our visit and come back after lunch. Even then, all of the doors were locked for another hour, so we had to hang out for a while until they got the all-clear and let us go shop in the bookstore. Seriously, when was the last time you got to experience TWO bomb threats in one week? Didn't I mention "excitement"?

And you'll only be able to tell those captivating stories if you attend this exciting launch of the new dedicated Dynamics GP partner conference. There will be presentations for sales, marketing, consulting, and developer roles, so there will be something for everybody.

So register now at the reIMAGINE2014 web site. Then book your flight to scenic Fargo and make that hotel reservation.

I hope to see you there!


Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.


C# is great, but can be very annoying

By Steve Endow

I just had a call to review an error that was occurring with an integration that I had developed.

The integration was adding records to a Dynamics GP ISV solution and while importing a large data file, it reported 6 errors. The ISV solution simply returned the generic .NET / SQL error indicating that a "string or binary data would be truncated."

The ISV has almost no documentation on their API, and the error message didn't tell us which field was causing the problem, so we reviewed the records that were getting the error, and saw that the customer names were very long. We assumed that a long company name was the problem. Looking at the ISV tables, I found that it only allowed 50 characters for the company name, unlike GP, which allows 65 characters.
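One way to confirm which column is too short, rather than guessing from the data, is to compare the target table's column lengths with the source values. In this sketch, ISV_Customer is a hypothetical name standing in for the ISV's table. RM00101.CUSTNAME is the GP customer name field.

```sql
-- Maximum length of each character column in the (hypothetical) ISV table
SELECT COLUMN_NAME, DATA_TYPE, CHARACTER_MAXIMUM_LENGTH
FROM INFORMATION_SCHEMA.COLUMNS
WHERE TABLE_NAME = 'ISV_Customer'
  AND CHARACTER_MAXIMUM_LENGTH IS NOT NULL
ORDER BY CHARACTER_MAXIMUM_LENGTH;

-- GP customers whose names would not fit in a 50-character column.
-- LEN ignores trailing spaces, which matters because CUSTNAME is a padded char field.
SELECT CUSTNMBR, CUSTNAME, LEN(CUSTNAME) AS NameLength
FROM dbo.RM00101
WHERE LEN(CUSTNAME) > 50
ORDER BY NameLength DESC;
```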

Fine, so now that we found the likely issue, I had to modify my import to truncate the company name at 50 characters.

I opened my C# code and quickly added Substring(0, 50) to the company name string, and tested that change, wondering if it would work. C# developers will probably guess what happened.

"Index and length must refer to a location within the string"

Lovely. Okay, so off to Google to search for "C# truncate string". That search led me to this StackOverflow discussion of the topic, where I saw, thankfully, that I wasn't the only one with this question. Although I found a few solutions to the problem, what was very disappointing was that there was even a discussion of the topic and that there were multiple solutions to the problem.

Seriously? A modern business programming language can't just truncate a string? We have to measure the length of the string and make sure that we send in a valid length value? That's like selling someone a Corvette and then telling them they have to adjust the timing of the engine themselves if they want to go over 50 mph. Why in the world haven't they provided a means of natively truncating a string?

As one post on the StackOverflow thread points out, the Visual Basic Left function provides such functionality without throwing an error, so why does C# insist on making developers across the world all write their own quasi-functions to perform such rudimentary tasks?

How about the C# "Right" string function? Oh, you mean the one that doesn't exist? Ya, that one, where you have to create your own quasi-function, or yet again reference VisualBasic to perform a simple, obvious task.
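For contrast, T-SQL's LEFT and RIGHT functions already behave the way the author wants. Asking for more characters than the string holds simply returns the whole string instead of raising an error, whereas C#'s Substring(0, 50) throws on a shorter string. A quick self-contained check, using a made-up company name:

```sql
DECLARE @Name VARCHAR(65) = 'Contoso Ltd.';  -- shorter than 50 characters

SELECT LEFT(@Name, 50)              AS Left50,     -- whole string, no error
       RIGHT(@Name, 4)              AS Right4,     -- last 4 characters
       LEFT(REPLICATE('x', 65), 50) AS Truncated;  -- cut down to 50 characters
```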

It's just silly. A purist says something like what was mentioned on the StackOverflow thread: "That's probably why the member function doesn't exist -- it doesn't follow the semantics of the datatype." Seriously, that's the explanation or justification of why this modern language is wearing polyester bell bottoms? The semantics of the datatype? As in the string data type, where people actually need to truncate strings?

Don't get me wrong, I really like C# and now prefer it very much over VB, but when I come across annoying gaps in functionality like this, it just makes me shake my head.


Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.


Adding additional voucher fields to the Payables Check Remittance report

By Steve Endow

I just had a request to add an additional voucher field to the payables Check Remittance report. It has been a little while since I've had to do this type of modification in Report Writer, so it took me a few minutes to remember the process.

In this case, the client wanted the TRDISAMT field added to the remittance. That field is not available by default in the Check Remittance report field list, so we have to add the voucher table to the report. In some reports, additional tables can easily be added, while in others, you have to join in a new table yourself.

First, insert the Check Remittance report as a Modified Report and click Open.

On the report Definition window, click on the Tables button.

On the Tables window, you should see three default tables for the report.

These three tables do not have any additional table relationships available--if you select one of the report tables and click New, the Related Tables window is empty.

To remedy this, we need to create a new Table Relationship. Looking at the three available tables on the report, the Temp table is not an option, and the Currency Setup is not a candidate. Our only hope is to join the voucher table to the Payment Apply To Work table.

Because the PM table names can get confusing, and because Report Writer inconsistently displays the Technical Name vs. the Display Name, I recommend using the Resource Descriptions window in GP to confirm which table we need to work with.

The PM Payment Apply To Work File has a Technical Name of PM_Payment_Apply_WORK. We'll need to know this name for the next step.

So then head back into Report Writer and click on the Tables button, then select Tables.

In the Tables list, locate PM_Payment_Apply_WORK and click on the Open button.

In the Table Definition window, click on Relationships. We are basically going to tell Report Writer how to join the voucher table to the Payment Apply Work table.

Notice that there are no Table Relationships. This matches what we saw in the report, where there were no additional tables to select.

Click on the New button.

In the Table Relationship Definition window, you will select a Secondary Table. We want to add the PM20000 table, which is the PM Transaction OPEN File.

You will then choose Key2 as the Secondary Table Key, and link the Apply To Document Type and Apply To Voucher Number fields from the Apply To Work File. Make sure to use Apply To, and not Apply From. Then click on OK.

Congratulate yourself--you now have a new Table Relationship!

So now that you've done all of that work, you need to add the table to your modified report.

Open your modified report, click Tables, select the PM Payment Apply To Work File, then click New.

Select PM Transaction OPEN File and click OK. Then close the Report Tables Relationships window and click on Layout to open your report.

You should now see the PM Transaction OPEN table in the drop-down list for your report, and can then add the Trade Discount Amount (TRDISAMT) field, or any other voucher field, to your remittance report.

Although adding the new Table Relationship took several steps, be aware that Dynamics GP reports vary dramatically in complexity. Some reports involve many more tables and relationships, which can make it difficult or impossible to perform the joins required to add another table. So certain report modifications could get very complex.


How will Dynamics GP Document Attach affect my database size?

By Steve Endow

I've seen a few complaints about how the Dynamics GP Document Attach feature will cause chaos and havoc because documents are stored in the SQL Server database, rather than on the file system.

It seems that some people are envisioning massive database growth that will make Dynamics GP databases difficult to manage.

Is this a valid concern? Will document attachments cause a significant increase in database size? I think it depends.

First, let's consider the attachments. Before you can assess the impact on the database, you'll need to understand what you will be attaching.

Thinking about volume, how many documents will you attach per day, week, or month? Will you be attaching documents to master records like Customers? Or transactions like Sales Orders? Will you attach a document to every Customer record or every Sales Order transaction? Or only a portion of your records and transactions?

Next, what types of documents will you attach? Word documents? PDFs? Image files? How large will each file be?

Here are the sizes of a few sample files I had handy:

12 page Word document: 178 KB

21 page Word document: 515 KB

1 page PDF of scanned grayscale document: 328 KB

3 page PDF of scanned grayscale document: 1,099 KB

1 page PDF of color web page printed from web browser: 30 KB - 300 KB (varied based on images in file)

Although each document is relatively small, the sizes vary significantly, which matters when you need to store thousands of them.

Let's suppose that the typical document is a 1 page PDF of a scanned grayscale document, so I'll work with my 328 KB test file. (You may very well have a better scanner or better scanning software that produces smaller files, so you'll have to do some tests to determine your average file size.)

I'll then assume that these documents will be attached to 50% of my Sales Order transactions. Assuming that I process 100 Sales Orders per day, that will be 50 document attachments at 328 KB each. There is some additional overhead to storing the attachments in the database, but for now, let's just work with the actual file size on disk.

328,000 bytes x 50 = 16,400,000 bytes = 16.4 megabytes per day

16.4 MB x 250 work days per year = 4.1 gigabytes per year
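If you want to plug in your own numbers, the same back-of-the-envelope math can be captured in a few lines. This is a minimal sketch that assumes decimal units (1 KB = 1,000 bytes and 1 GB = 1,000,000,000 bytes, as in the figures above) and, like the estimate itself, ignores the extra overhead of storing attachments in SQL Server. The class and method names are hypothetical.

```csharp
// Hypothetical estimator for attachment growth, using the same assumptions
// as the scenario above: decimal units and no database storage overhead.
public static class AttachmentGrowthEstimate
{
    // Bytes added per day = average file size x transactions per day x share of
    // transactions that get an attachment.
    public static double BytesPerDay(double avgFileBytes, int transactionsPerDay, double attachShare)
    {
        return avgFileBytes * transactionsPerDay * attachShare;
    }

    // Gigabytes added per year, optionally across several similar company databases.
    public static double GigabytesPerYear(double bytesPerDay, int workDaysPerYear, int databases = 1)
    {
        return bytesPerDay * workDaysPerYear * databases / 1e9;
    }
}
```

For the scenario above, `BytesPerDay(328000, 100, 0.5)` gives 16,400,000 bytes, and `GigabytesPerYear` on that value with 250 work days gives about 4.1 GB. Passing `databases: 5` or `databases: 10` gives roughly 20.5 GB or 41 GB, which is where the multi-database range below comes from.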

So in theory, based on the assumptions in my scenario, I will be storing an additional 4.1 gigabytes per year in my database. Is that a lot? I think that depends on the business, but in general, I would say that 4.1 GB shouldn't be a huge issue for most customers. A few things to consider: how will the larger database affect your backup routines? And if you compress your backups, will they compress as well once they contain the binary data from the attachments?

But there are certainly customers where it may be a problem. Consider large customers that have 5 or 10 very active Dynamics GP databases. If they have similar numbers of documents for all databases, that could mean 20 GB to 40 GB of documents per year. And if you consider that the customer may retain those documents for several years, you could end up with a significant amount of space consumed for document storage.

So yes, it is possible that Document Attach could result in significant database growth for certain larger or high volume customers.

However, I think this has to be considered in a larger context. Document Attach is an optional feature in Dynamics GP--you don't have to use it, and I suspect many customers will not. For customers that like the feature, if you don't need to attach a lot of documents and have simple requirements, it will probably work well. If you need to attach a lot of documents, or if you need to attach large documents, Document Attach may not be the best option for you. You certainly have other options, as there are plenty of document scanning and management solutions (including third party add-ons for GP), and those options will probably have several other features (indexing, searching, routing and workflow) that are valuable to you.


Cool SQL Server Management Studio editing trick

By Steve Endow

I've been using SQL Server Management Studio since long before it was called Management Studio, but I regularly stumble across some new feature or shortcut that I never knew existed.

Today I was querying several tables.

I then wanted to delete the contents of all of those tables. One trick I use is to hold down the ALT key while dragging the mouse to select a vertical column of text. I use this to highlight the "SELECT *" text on all of the lines at once.

I am then able to delete the selected text for all lines.

So that part I knew. But now I wanted to put the word DELETE at the beginning of each of the lines. Normally I would copy and paste it on every line. But this time, I just started to type the word DELETE.

As I typed, the characters appeared on all of the lines simultaneously! The thin gray line is the former selection area, and it essentially turns into a giant vertical cursor on all of the lines.

So I just typed the word DELETE once, and it appeared on all lines. Presto!

Pretty cool.

I just tested this technique in my UltraEdit text editor, and it worked there as well. It did not work in MS Word though.

http://www.precipioservices.com


Importing Analytical Accounting data using eConnect

By Steve Endow

I was recently asked for assistance to troubleshoot an eConnect integration that involved importing Dynamics GP transactions with Analytical Accounting distributions.

The user was able to import the transaction, but the imported transaction did not have the associated Analytical Accounting data in GP. There were a few mistakes in the import, and there were a few missing values that produced some misleading error messages from eConnect, so I thought I would briefly describe how to include Analytical Accounting data in an eConnect import.

First, make sure to fully review the information in the eConnect Programmer's Guide related to the taAnalyticsDistribution node. There are a few misleading elements and one inconsistency that may trip you up.

Let's review a few key fields in the help file:

DOCNMBR: This should be the Dynamics GP document number, such as the Journal Entry or Voucher Number. In the case of a PM voucher, this should not be the vendor's invoice / document number.

DOCTYPE: This is NOT the same numeric doc type value of the main transaction being imported. If you scroll to the bottom of the taAnalyticsDistribution help page, you will see a list of AA DOCTYPE values that you should use depending on your main transaction type.

For example, if you are importing a PM Voucher, the value should be zero, which is different than the DOCTYPE=1 you would use in your taPMTransactionInsert node.

DistSequence: If the GL accounts in your distributions are unique, this field is optional. If you have duplicate accounts in your distribution, you must supply this value, which means you will likely also need to assign the DistSequence value in your transaction distributions so that you know the values and can pass them to AA.

ACTNUMST: The GL account number for the distribution line related to this AA entry. I don't understand why this value is marked as Not Required. Obviously if you are sending in DistSequence, then ACTNUMST would be redundant, but I would recommend always populating this value.
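To illustrate the DistSequence point, here is a minimal sketch that numbers each GL distribution line and gives its AA line the same DistSequence and account, so two lines that use the same account remain distinguishable. The types are simplified, hypothetical stand-ins, not the eConnect serialization classes, and the step between sequence numbers is an assumption; use whatever numbering your integration actually assigns to the distributions.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified stand-ins; NOT the eConnect serialization classes.
public class GlDistLine
{
    public string Account;
    public decimal Amount;
    public int DistSequence;
}

public class AaDistLine
{
    public string Account;
    public decimal Amount;
    public int DistSequence;
}

public static class DistSequencing
{
    // Numbers each GL distribution line (step, 2 x step, ...) and creates a
    // matching AA line carrying the same DistSequence and account. When the
    // same account appears twice, the sequence number tells the lines apart.
    public static List<AaDistLine> Assign(IList<GlDistLine> lines, int step)
    {
        var aaLines = new List<AaDistLine>();
        for (int i = 0; i < lines.Count; i++)
        {
            lines[i].DistSequence = (i + 1) * step;
            aaLines.Add(new AaDistLine
            {
                Account = lines[i].Account,
                Amount = Math.Abs(lines[i].Amount), // mirrors Math.Abs(lineAmount) in the snippet below
                DistSequence = lines[i].DistSequence
            });
        }
        return aaLines;
    }
}
```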

As for the AA distribution values, you also need to ensure that you are passing in valid AA dimension and code values, that the combination is valid, and that the values can be used with the specified GL account. This is one thing that can make importing AA data so tedious: if the customer regularly adds AA codes or GL accounts, an import may fail unless everything is maintained and updated in GP. I validate all of the AA data prior to importing, as that is much easier for the user than getting eConnect errors.
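A pre-import check along those lines might look something like this minimal sketch. It assumes you have already loaded the valid codes for each AA dimension, and the dimensions allowed for each GL account, from GP into in-memory lookups (how you query them is not shown), and it simplifies GP's account class rules to a yes/no check per account. The class and method names are hypothetical.

```csharp
using System.Collections.Generic;

// Hypothetical pre-import validation of one AA line against lookups that
// were loaded from GP beforehand.
public static class AaPreValidator
{
    // Returns readable problems for the line; an empty list means it looks valid.
    public static List<string> Validate(
        string account, string dimension, string code,
        IDictionary<string, ISet<string>> codesByDimension,
        IDictionary<string, ISet<string>> dimensionsByAccount)
    {
        var problems = new List<string>();

        // Is the dimension known, and is the code valid for that dimension?
        ISet<string> codes;
        if (!codesByDimension.TryGetValue(dimension, out codes))
            problems.Add("Unknown AA dimension '" + dimension + "'.");
        else if (!codes.Contains(code))
            problems.Add("Code '" + code + "' is not valid for dimension '" + dimension + "'.");

        // Can this dimension be used with this GL account?
        ISet<string> dims;
        if (!dimensionsByAccount.TryGetValue(account, out dims) || !dims.Contains(dimension))
            problems.Add("Dimension '" + dimension + "' cannot be used with account '" + account + "'.");

        return problems;
    }
}
```

Reporting these problems back to the user before calling eConnect gives them something actionable (add the code, or fix the account setup in GP) instead of a generic eConnect error.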

Here is a snippet of the AA code from a GL JE import I developed. As long as you properly assign the handful of AA values, it should be fairly straightforward.

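aaDist.DOCNMBR = trxJrnEntry.ToString();   // GP document number (here, the JE number)
aaDist.DOCTYPE = 0;                        // 0 = GL JE Transaction
aaDist.AMOUNT = Math.Abs(lineAmount);      // absolute value of the distribution amount
aaDist.DistSequenceSpecified = true;       // flag so DistSequence is included in the serialized XML
aaDist.DistSequence = lineSequence;        // same sequence assigned to the matching GL distribution line
aaDist.ACTNUMST = lineAccountNumber;       // GL account of the matching distribution line
aaDist.DistRef = lineDescription;          // distribution reference
aaDist.NOTETEXT = longDescription;         // note text
aaDist.aaTrxDim = aaDimension;             // AA transaction dimension
aaDist.aaTrxDimCode = aaCode;              // code within that dimension
aaDists.Add(aaDist);                       // collect the AA lines for the transaction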


Useless Dynamics GP Error Messages, Episode #47

By Steve Endow

It's been quite a while since I've had to import package files into Dynamics GP, so when I got this error today while trying to import a large package file into GP 2013, it puzzled me for a few minutes.

Error: "VBA cannot be initialized. Cannot import this package because it contains VBA components."

If you search for this error, you will likely come across a Microsoft KB article saying that this error means that your VBA install is damaged and you have to fix it. Um, no, I don't think that's the issue, since I have three GP installs on this server, and all of the VBA customizations work fine, and I can import packages into those other GP installs.

So something else was up.

Thanks to the prolific Dynamics GP Community Forum, I found this thread from 2010 where a clever user recommended checking to see if Modifier was registered in GP. Hmmmm.

https://community.dynamics.com/gp/f/32/p/31214/72654.aspx

Sure enough, this was a new GP 2013 R2 install, and I hadn't yet entered reg keys. Once I entered the license keys and saved them, the package imported fine.

What a useless error message.


Two gotchas when developing a COM component using C# and .NET 4

By Steve Endow

(Warning: This post is full-developer geek-out technical. Unless C#, COM, default constructors, and RegAsm are in your vocabulary, you may want to save this one for a future case of insomnia.)

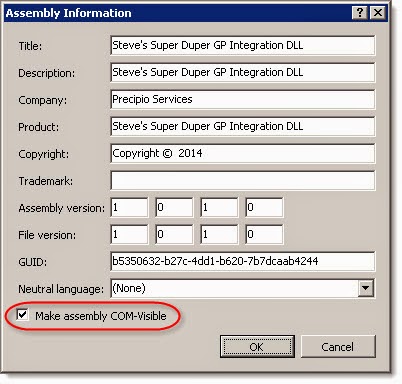

In the last year, I've had two customers request Dynamics GP integrations packaged up as COM DLLs so that their internal applications can call the DLL to import transactions into GP.

The benefit of this approach is that their internal application can control exactly when and how data is imported in GP. They don't have to wait for a scheduled import or some other external process to trigger the import.

The customer's internal application creates the data, so they want to call the import as soon as the data is ready and have their application verify that everything imported successfully. This approach can produce a very tightly integrated and streamlined process.

Not a problem. Many applications developed with something other than .NET support the older COM interface standard. Fortunately, .NET makes it relatively easy to expose .NET assemblies as COM components. With newer versions of Visual Studio, it is just a single checkbox.

Very simple, right?

I previously developed a COM-Visible .NET DLL for a customer and that's all it took. But one difference for that project is that the customer asked that I use Visual Basic. With the Visual Basic DLL, the DLL registered fine and I had zero COM issues, somewhat to my surprise.

But this time, when my customer tried to register the C# DLL as a COM component, they received this error.

RegAsm : error RA0000 : Failed to load 'C:\path\import.dll' because it is not a valid .NET assembly

So, what was different? Why was the regasm command failing?

Well, after some puzzling, I realized that two things were different this time. First, I realized that I developed this DLL using .NET 4.0, whereas the last one was .NET 3.5. While that may not seem like a big deal, I now know that it makes all the difference. It turns out that with .NET 4, there is a different version of RegAsm.

So instead of the RegAsm.exe in this folder:

C:\Windows\Microsoft.NET\Framework\v2.0.50727

You need to use the RegAsm.exe in this folder with .NET 4:

C:\Windows\Microsoft.NET\Framework\v4.0.30319

Okay, so now that I had figured that out, and used the .NET 4 version of RegAsm, I received a different error.

RegAsm : warning RA0000 : No types were registered

Huh. I tried a few different options for RegAsm, but just couldn't get it to recognize the COM interface.

After some moderately deep Googling, I found a helpful post on Stack Overflow. The solution poster noted that with C#, you need to have a default constructor for COM. Um, okay, so...wait a minute, what? Huh?

And that's when I remembered. My prior COM project used VB, whereas this one was C#. Well, one of the things I learned during my transition from VB to C# was that VB essentially hides constructors from you. And looking at my VB code, it looks like I did a fair amount of digging and added the "Public Sub New()" method, which is required for a COM visible class.

With C#, it is similar, but the syntax is slightly different.

![]()

(Warning: This post is full-developer geek-out technical. Unless C#, COM, default constructors, and RegAsm are in your vocabulary, you may want to save this one for a future case of insomnia.)

In the last year, I've had two customers request Dynamics GP integrations packaged up as COM DLLs so that their internal applications can call the DLL to import transactions into GP.

The benefit of this approach is that their internal application can control exactly when and how data is imported into GP. They don't have to wait for a scheduled import or some other external process to trigger the import.

The customer's internal application creates the data, so they want to call the import as soon as the data is ready and then have their application verify that everything imported successfully. This can produce a very tightly integrated and streamlined process.

Not a problem. For applications that are developed using something other than .NET, many support the older COM software interface standard. Fortunately, .NET makes it relatively easy to expose .NET assemblies as COM components. With newer versions of Visual Studio, it is just a single checkbox.
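For readers who want to see what sits behind that setting, here is a minimal C# sketch of a COM-visible class. COM visibility is controlled by the ComVisible attribute from System.Runtime.InteropServices (the Visual Studio "Make assembly COM-Visible" option sets it at the assembly level). The interface, class, and member names (ITransactionImport, TransactionImport, ImportFile, LastError) and both GUIDs are hypothetical placeholders, and the actual Dynamics GP import logic is omitted. Returning a success flag plus an error message is just one simple way to let the calling application verify the import.

```csharp
using System;
using System.IO;
using System.Runtime.InteropServices;

// Hypothetical COM-facing interface. The GUIDs below are placeholders;
// generate your own for a real component.
[ComVisible(true)]
[Guid("3F2B8C1D-6A4E-4D2B-9C7F-1E5A0B3D8C21")]
[InterfaceType(ComInterfaceType.InterfaceIsDual)]
public interface ITransactionImport
{
    bool ImportFile(string path);
    string LastError { get; }
}

// Hypothetical class that a COM client could create and call.
[ComVisible(true)]
[Guid("9A4E2D7C-1B3F-4C8A-8E6D-5F0C2A7B9D14")]
[ClassInterface(ClassInterfaceType.None)]
public class TransactionImport : ITransactionImport
{
    // COM clients can only use a public parameterless constructor.
    public TransactionImport()
    {
        LastError = "";
    }

    public string LastError { get; private set; }

    // Returns true on success so the caller can verify the result.
    // Only a file-existence check is shown; the GP import itself is omitted.
    public bool ImportFile(string path)
    {
        if (!File.Exists(path))
        {
            LastError = "File not found: " + path;
            return false;
        }

        LastError = "";
        return true;
    }
}
```

Exposing an explicit interface with ClassInterfaceType.None keeps the COM contract limited to the members you choose, rather than everything public on the class.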

Very simple, right?

I had previously developed a COM-visible .NET DLL for a customer, and that's all it took. But one difference with that project was that the customer had asked me to use Visual Basic. The Visual Basic DLL registered fine, and, somewhat to my surprise, I had zero COM issues.

But this time, when my customer tried to register the C# DLL as a COM component, they received this error.

RegAsm : error RA0000 : Failed to load 'C:\path\import.dll' because it is not a valid .NET assembly

So, what was different? Why was the RegAsm command failing?

Well, after some puzzling, I realized that two things were different this time. First, I had developed this DLL using .NET 4.0, whereas the last one used .NET 3.5. While that may not seem like a big deal, I now know that it makes all the difference: .NET 4 comes with its own, different version of RegAsm.

So instead of using the RegAsm.exe in this folder:

C:\Windows\Microsoft.NET\Framework\v2.0.50727

you need to use the one in this folder with .NET 4:

C:\Windows\Microsoft.NET\Framework\v4.0.30319
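The version rule can be captured in a tiny lookup. The sketch below is a hypothetical helper (RegAsmLocator.PathFor is not part of any library) that maps the .NET Framework version a DLL targets to the matching RegAsm.exe, using the 32-bit folders shown above: .NET 2.0 through 3.5 all run on CLR 2.0 (v2.0.50727), while .NET 4.0 and the later 4.x releases run on CLR 4 (v4.0.30319). It assumes the default Windows install location and deliberately ignores .NET 1.x.

```csharp
using System;

public static class RegAsmLocator
{
    // Hypothetical helper: returns the RegAsm.exe that ships with the CLR
    // a given .NET Framework version runs on (32-bit Framework folder).
    public static string PathFor(Version targetFramework)
    {
        if (targetFramework == null)
            throw new ArgumentNullException(nameof(targetFramework));

        const string root = @"C:\Windows\Microsoft.NET\Framework\";

        // .NET 2.0, 3.0 and 3.5 all run on CLR 2.0.
        if (targetFramework.Major >= 2 && targetFramework.Major < 4)
            return root + @"v2.0.50727\RegAsm.exe";

        // .NET 4.0 through 4.x run on CLR 4, which is updated in place.
        if (targetFramework.Major == 4)
            return root + @"v4.0.30319\RegAsm.exe";

        throw new ArgumentOutOfRangeException(nameof(targetFramework),
            "Only .NET Framework 2.0 through 4.x are handled in this sketch.");
    }
}
```

For a .NET 4.0 DLL like the one in this post, PathFor(new Version(4, 0)) points at the v4.0.30319 copy; the v2.0.50727 copy is the one that matches a .NET 3.5 DLL like the earlier VB project.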

Okay, so once I had figured that out and used the .NET 4 version of RegAsm, I received a different error:

RegAsm : warning RA0000 : No types were registered

Huh. I tried a few different options for RegAsm, but just couldn't get it to recognize the COM interface.

After some moderately deep Googling, I found a helpful post on Stack Overflow. The person who posted the solution noted that with C#, you need to have a default constructor for COM. Um, okay, so...wait a minute, what? Huh?

And that's when I remembered: my prior COM project used VB, whereas this one was C#. One of the things I learned during my transition from VB to C# was that VB essentially hides constructors from you. Looking back at my VB code, it appears that I had done a fair amount of digging and added the "Public Sub New()" method, which is required for a COM-visible class.

With C#, it is similar, but the syntax is slightly different.

public class Import
{
    // Default constructor needed for COM
    public Import()
    {
    }
}

You just have to add an empty default constructor to the public class in order to make it COM-visible.

Obvious, right? Not so much.

Now that I know, it's no big deal, but when I'm asked to do another COM visible component in a few years, I'm sure I'll forget. Hence this blog post!

Anyway, after adding the default constructor and recompiling, the DLL registered just fine.
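A related detail: the C# compiler generates an implicit public parameterless constructor only when a class declares no constructors at all, so adding any constructor that takes parameters removes it. The sketch below is a hypothetical pre-flight check (ComPreflight.LooksComCreatable and the two example classes are made-up names) that flags public classes a COM client could not create. Its rules are a rough approximation of what RegAsm looks for, not RegAsm's actual implementation.

```csharp
using System;
using System.Reflection;
using System.Runtime.InteropServices;

public static class ComPreflight
{
    // Rough approximation (NOT RegAsm's actual logic) of whether a COM
    // client could create an instance of the given type.
    public static bool LooksComCreatable(Type t)
    {
        // Only public, concrete, non-generic classes are candidates.
        if (!t.IsClass || !t.IsPublic || t.IsAbstract || t.ContainsGenericParameters)
            return false;

        // A type-level [ComVisible] wins; otherwise the assembly-level
        // setting applies; with no attribute at all, visibility defaults to true.
        bool visible = t.GetCustomAttribute<ComVisibleAttribute>()?.Value
            ?? t.Assembly.GetCustomAttribute<ComVisibleAttribute>()?.Value
            ?? true;

        // COM activation can only call a public parameterless constructor.
        bool hasDefaultCtor = t.GetConstructor(Type.EmptyTypes) != null;

        return visible && hasDefaultCtor;
    }
}

// Hypothetical example: declaring only this constructor stops the compiler
// from generating the implicit public parameterless one.
[ComVisible(true)]
public class ImportWithoutDefault
{
    public ImportWithoutDefault(string companyDb) { }
}

// Hypothetical example: an explicit default constructor restores COM creation.
[ComVisible(true)]
public class ImportWithDefault
{
    // Default constructor needed for COM
    public ImportWithDefault() { }

    public ImportWithDefault(string companyDb) { }
}
```

Running a check like this over the types in your DLL before handing it off could surface the missing constructor earlier than a "No types were registered" warning does.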

Steve Endow is a Microsoft MVP for Dynamics GP and a Dynamics GP Certified IT Professional in Los Angeles. He is the owner of Precipio Services, which provides Dynamics GP integrations, customizations, and automation solutions.

↧

Management Reporter- Date Not Printing Correctly

You may have noticed that the default, in recent versions of Management Reporter, is for reports to open in the web viewer rather than the Report Viewer itself. Of course, you can change this setting (so that it defaults to the Report Viewer instead) in Report Designer, using Tools>>Options.

You may have noticed, though, that the auto text for the date (@DateLong) in a header or footer returns a different result depending on whether the report opens in the web viewer or in the Report Viewer.

When a report opens in the web viewer, the date/time settings (which determine how the date is formatted) are pulled from the profile of the service account that runs the Management Reporter Process service. But when you generate a report and it opens in the Report Viewer, it uses your local user account's date/time settings.

So, if you plan to use the web viewer extensively, you will want to make sure that the date/time settings are set in the profile of the account that runs the process service. The default long date format generally includes the day of the week; this is often the element that users want to remove so that the report does not say "Tuesday March 12th, 2019".
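To illustrate why the same @DateLong text can come out differently, here is a small C# sketch. It is not Management Reporter's code; it only shows how the standard long date specifier ("D") follows whatever long date pattern the active settings define. LongDateDemo.Format is a hypothetical name, and the two patterns stand in for an account's regional settings: one includes the day of the week ("dddd") and one does not.

```csharp
using System;
using System.Globalization;

public static class LongDateDemo
{
    // Formats a date with the "D" (long date) standard specifier, using a
    // culture whose LongDatePattern is set explicitly. The pattern stands in
    // for the long date format an account's regional settings define.
    public static string Format(DateTime date, string longDatePattern)
    {
        // Clone() returns a writable copy, even of the read-only invariant culture.
        var culture = (CultureInfo)CultureInfo.InvariantCulture.Clone();
        culture.DateTimeFormat.LongDatePattern = longDatePattern;
        return date.ToString("D", culture);
    }
}
```

With new DateTime(2019, 3, 12), the pattern "dddd, MMMM d, yyyy" returns "Tuesday, March 12, 2019", while "MMMM d, yyyy" returns "March 12, 2019".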

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a senior managing consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.


↧

↧

Management Reporter- Columns Not Shading Properly

Sometimes the devil is in the details. Honestly, it seems like ALWAYS the devil is in the details. There are quite a few community and blog posts floating around regarding column shading in Management Reporter. So I thought I would summarize my recent experience with this, as it is/was a little trickier than expected.

First, yes, it really is as easy as highlighting the column in the column format and applying shading.

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a senior managing consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.


After you do that, yes, it looks funny (meaning the whole column does not appear shaded). But that is okay, and NORMAL.

Now, here is where it gets tricky. If you have applied ANY formatting to your row (including indenting, highlighting, font changes, etc.), then the shading will not take effect. This has been logged with Microsoft as quality report #352181, and unfortunately, there is no scheduled fix date yet. So the only workaround is to recreate the row format without the additional formatting.

Results when indenting is applied on the row format...note the rows that are indented are not shaded properly.

Row format with indenting...

So it seems that, for now, you have to choose between formatting in the row format (and I mean ANY formatting, not just shading) and shading in the column format, based on your needs.

↧

Back to Basics- ODBC connections and third party products

We had an oddity a couple of months ago with a relatively well-known third-party product. Here were the symptoms:


- User could run a billing generation process when logged in on the server

- User could not run process when logged in to their local install

- SA could not run process when logged in to their local install

- Set up new user, still could not run process

- Happened from multiple workstations

- Spent hours and hours on the phone with the third-party vendor, as it was perceived to be a code issue

Here's a blog post on how to set up an ODBC connection properly for GP (or let the installation automatically create the ODBC connection to avoid issues):

https://community.dynamics.com/gp/b/azurecurve/archive/2012/09/25/how-to-create-an-odbc-for-microsoft-dynamics-gp-2010.aspx

Christina Phillips is a Microsoft Certified Trainer and Dynamics GP Certified Professional. She is a senior managing consultant with BKD Technologies, providing training, support, and project management services to new and existing Microsoft Dynamics customers. This blog represents her views only, not those of her employer.

↧

Microsoft eliminates exams and certifications for Dynamics GP, NAV, SL, and RMS

By Steve Endow

Two weeks ago I was coordinating with a Dynamics GP partner to update my Dynamics GP certifications in conjunction with them renewing their MPN certification requirements.

But when one of the consultants tried to schedule an exam on the Prometric web site, the web site would not let her complete the registration process, saying that only vouchers could be used to pay for the Dynamics GP exam.

At the same time, we found some discrepancies on the Microsoft web site regarding the Dynamics GP certification requirements and MPN certification requirements, so the partner inquired with Microsoft. We were told that some changes were being made to the certification requirements, and that the updates would be communicated shortly.

Today we received an official announcement that Microsoft is eliminating the exams and certifications for most, but not all, of the Dynamics products.

https://mbs.microsoft.com/partnersource/northamerica/readiness-training/readiness-training-news/MSDexamreqchanges

I have mixed feelings about this change. A few years ago, Microsoft made a big push to require Dynamics GP certification and to require all partners to have several certified consultants on staff. That produced dramatic changes in the partner channel that affected a lot of people. Eventually things settled down, and the exams and certifications became routine.

This announcement appears to be a 180-degree shift from that prior strategy, completely abandoning exams and certifications. While this may open the market back up to smaller partners, I now wonder whether consulting quality may decrease as a result. But this assumes that the exams and certifications mattered and actually improved consulting quality, and I don't know how we could measure or assess that.

So, welcome to a new phase in the Microsoft Dynamics strategy.

What do you think? Is this a good thing? A bad thing? Neither?
